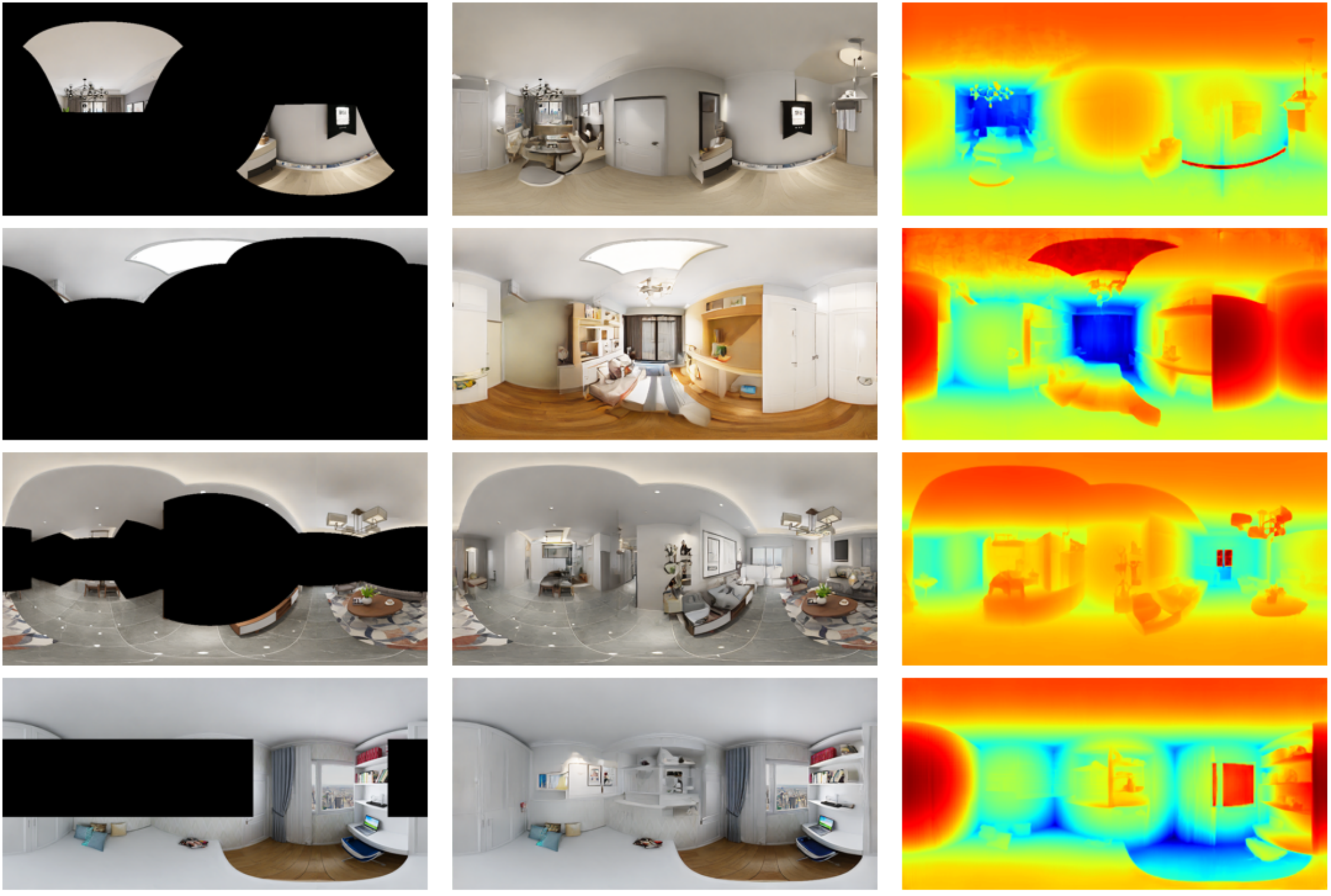

Generating complete 360° panoramas from narrow field of view images is

ongoing research as omnidirectional RGB data is not readily available. Existing GAN-based

approaches face some barriers to achieving higher quality output, and have poor

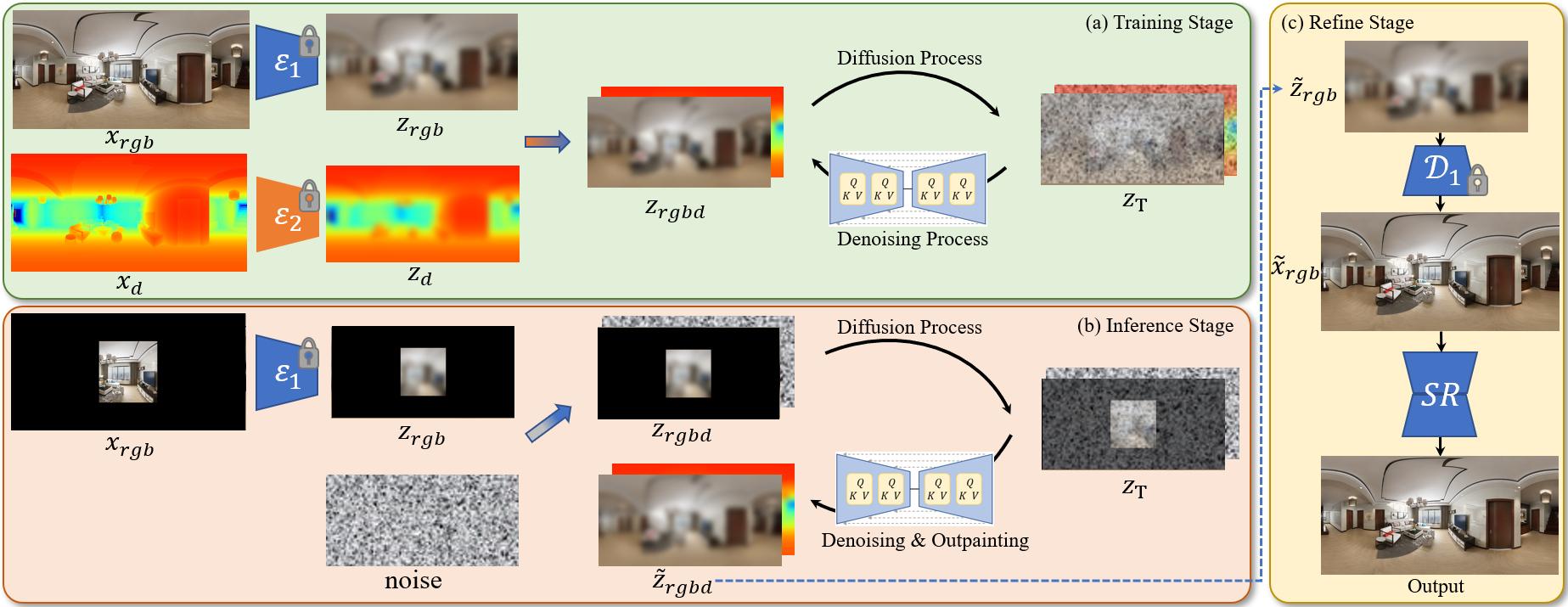

generalization performance over different mask types. In this paper, we present our

360° indoor RGB panorama outpainting model using latent diffusion models (LDM),

called PanoDiffusion. We introduce a new bi-modal latent diffusion structure that utilizes

both RGB and depth panoramic data during training, which works surprisingly well to outpaint

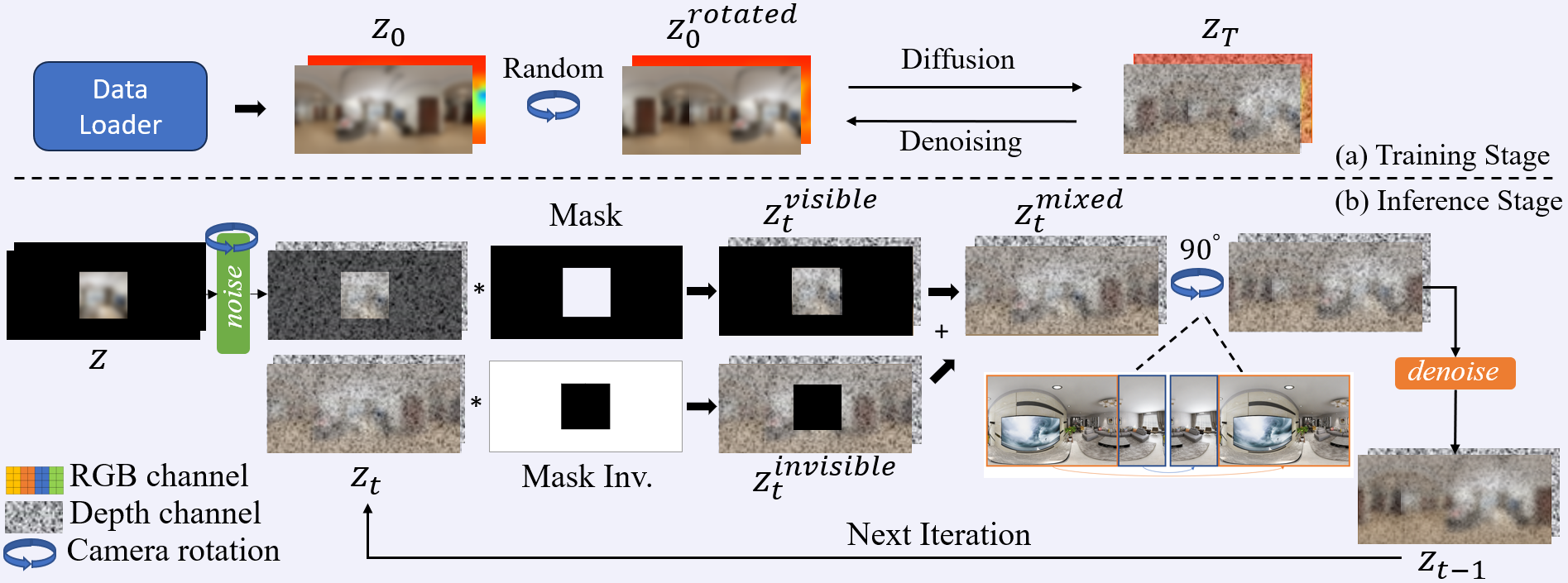

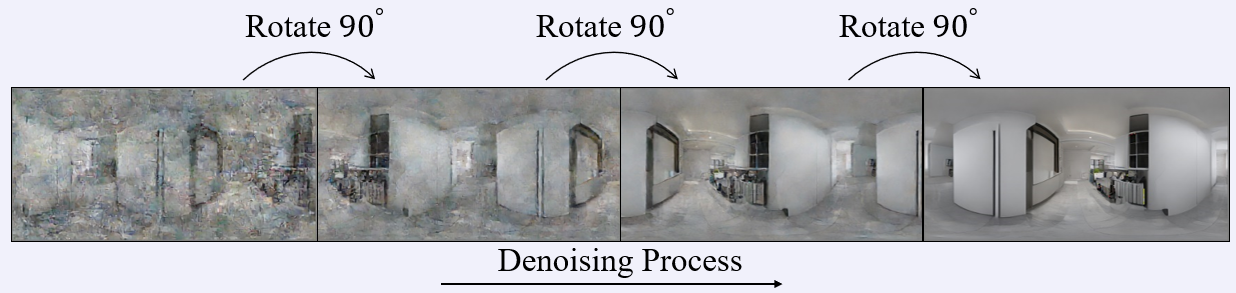

depth-free RGB images during inference. We further propose a novel technique of introducing

progressive camera rotations during each diffusion denoising step, which leads to substantial

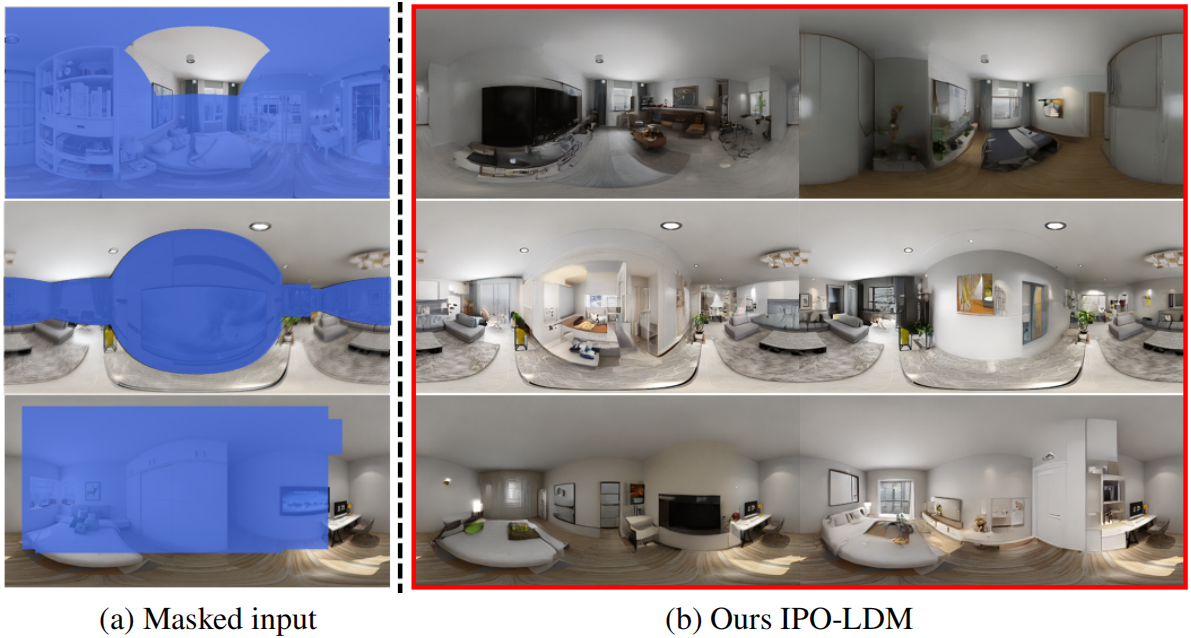

improvement in achieving panorama wraparound consistency. Results show that our PanoDiffusion

not only significantly outperforms state-of-the-art methods on RGB-D panorama outpainting by producing

diverse well-structured results for different types of masks, but can also synthesize high-quality depth

panoramas to provide realistic 3D indoor models.
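The idea of rotating the camera during denoising can be illustrated with a small sketch. It assumes an equirectangular latent of shape `(C, H, W)`, where the width axis spans 360° of yaw, so a rotation about the vertical axis is exactly a circular shift along the width. The fixed per-step shift (`shift_per_step`) and the `denoise_step` callable are hypothetical placeholders, not PanoDiffusion's actual schedule or network; the sketch only shows how rotating the latent and its conditioning before every step moves the left/right seam to a different column each time, and how the accumulated rotation is undone at the end.

```python
import numpy as np


def rotate_yaw(x: np.ndarray, shift: int) -> np.ndarray:
    """Rotate an equirectangular array about the vertical axis.

    The last axis spans 360 degrees of yaw, so a yaw rotation is a
    circular shift along that axis.
    """
    return np.roll(x, shift, axis=-1)


def sample_with_rotation(z_T, known, mask, denoise_step, num_steps, shift_per_step):
    """Denoising loop that rotates the panorama before every step (sketch).

    z_T:   initial noisy latent, shape (C, H, W)
    known: latent of the visible region, same shape as z_T
    mask:  1 where content is known, 0 where it must be outpainted, shape (H, W)
    denoise_step: hypothetical callable (z, known, mask, t) -> z for one step
    """
    z = z_T
    total_shift = 0
    for t in reversed(range(num_steps)):
        # Rotate the latent and its conditioning together so the wraparound
        # seam falls at a different column on each denoising step.
        z = rotate_yaw(z, shift_per_step)
        known = rotate_yaw(known, shift_per_step)
        mask = rotate_yaw(mask, shift_per_step)
        total_shift += shift_per_step
        z = denoise_step(z, known, mask, t)
    # Undo the accumulated rotation so the output aligns with the input view.
    return rotate_yaw(z, -total_shift)
```

Because the latent, the known region, and the mask are always rotated together, the conditioning stays consistent in every rotated frame; only the position of the seam relative to the denoiser changes from step to step.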

Model Designs